Hadoop vs Spark: Choosing the Right Framework

As modern companies rely on large volumes of data to better understand their customers and their industry, Big Data technologies are gaining tremendous traction.

Like AI, Big Data landed on the list of top tech trends for 2020, and both start-ups and Fortune 500 businesses are expected to adopt it to pursue rapid market growth and greater customer loyalty. Yet while many organizations are eager to replace their conventional data analytics tools with Big Data platforms, which also prepare the ground for Blockchain and AI development, they are still unsure which Big Data tool to select. In particular, they face the dilemma of picking between Apache Hadoop and Apache Spark, the two titans of the Big Data universe.

So, with this in mind, this article compares Apache Spark and Hadoop to help you find out which one is the right choice for your needs.

But first, here is a brief introduction to what Hadoop and Spark are all about.

Apache Hadoop

Apache Hadoop is an open-source, distributed, Java-based platform that lets users store and process big data across clusters of machines using simple programming constructs. It consists of several modules that work together:

- Hadoop Common

- Hadoop Distributed File System (HDFS)

- Hadoop YARN

- Hadoop MapReduce

Apache Spark

Apache Spark, by contrast, is an open-source, distributed cluster-computing framework for big data that is designed to be easy to use and fast.

Because of the possibilities they offer, both big data frameworks are backed by several large corporations.

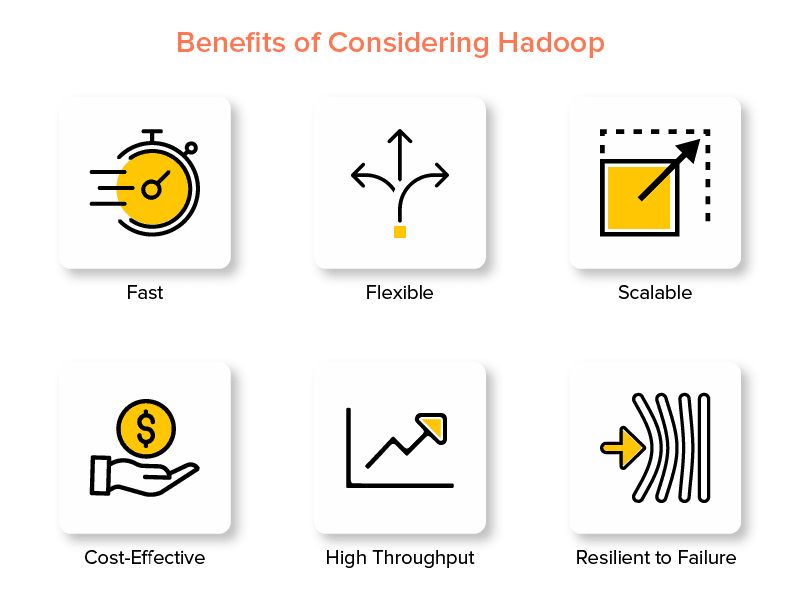

Benefits of Hadoop

1. Fast

One of the characteristics that makes Hadoop popular in the world of big data is that it is fast.

Its storage is based on a distributed file system that essentially maps data to wherever it is located on the cluster. In addition, the data and the tools used to process it typically reside on the same servers, which makes data processing simpler and quicker.

Hadoop is reported to process terabytes of unstructured data in just a few minutes, and petabytes in hours.

2. Flexible

Unlike conventional data processing tools, Hadoop offers a high degree of flexibility.

It helps organizations collect data from various sources (such as social media and emails), work with different types of data (both structured and unstructured), and gain useful insights for various purposes (such as log processing, marketing campaign analysis, and fraud detection).

3. Scalable

Another benefit of Hadoop is that it is highly scalable. Unlike conventional relational database systems (RDBMS), the platform allows organizations to store and distribute massive data sets across hundreds of servers operating in parallel.

4. Cost-Effective

Compared to other big data analytics software, Apache Hadoop is much cheaper. This is because no specialized machines are required; it runs on a cluster of commodity hardware. It is also easy to add more nodes over time.

In other words, one can easily add nodes without downtime or extensive pre-planning.

5. High performance

In Hadoop, data is stored in a distributed way, so a job can be split into small pieces that are processed in parallel across the data. This lets companies complete more jobs in less time, which results in higher throughput.
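The idea of splitting a job into pieces that run in parallel can be sketched with a toy word count in plain Python. This is only an illustration of the map, shuffle, and reduce steps, not Hadoop's actual API: the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are hypothetical, and a thread pool stands in for the cluster nodes.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def map_phase(split):
    """Emit a (word, 1) pair for every word in one input split."""
    return [(word.lower(), 1) for word in split.split()]


def shuffle(mapped_splits):
    """Group the emitted values by key, as happens between map and reduce."""
    groups = defaultdict(list)
    for pairs in mapped_splits:
        for key, value in pairs:
            groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Combine all values seen for one key into a single result."""
    return key, sum(values)


def word_count(splits, workers=4):
    # Each split is mapped independently, so the map calls can run in parallel.
    # The thread pool is a stand-in for worker nodes (an assumption of this sketch).
    with ThreadPoolExecutor(max_workers=workers) as pool:
        mapped = list(pool.map(map_phase, splits))
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())
```

Calling `word_count(["spark and hadoop", "hadoop stores data"])` maps each string separately and then merges the counts per word. In a real cluster the splits would be file blocks and the workers separate machines.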

6. Failure-resilient

Last but not least, Hadoop offers a high degree of fault tolerance, which helps minimize the effects of failure. It stores replicas of each block on different nodes, which allows data to be retrieved if any node goes down.
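As a rough sketch of why replication helps, the snippet below places each block on several nodes and then checks which blocks are still readable after some nodes fail. It is a simplified model under stated assumptions: the round-robin placement and the helper names `place_blocks` and `readable_blocks` are invented for illustration, and real HDFS uses a more sophisticated placement policy. The default of three replicas matches the usual HDFS default.

```python
def place_blocks(num_blocks, nodes, replication=3):
    """Assign each block to `replication` distinct nodes, round-robin.

    Round-robin placement is an assumption of this sketch, not HDFS's policy.
    """
    if replication > len(nodes):
        raise ValueError("replication factor exceeds number of nodes")
    placement = {}
    for block in range(num_blocks):
        placement[block] = [
            nodes[(block + offset) % len(nodes)] for offset in range(replication)
        ]
    return placement


def readable_blocks(placement, failed_nodes):
    """Return the blocks that still have at least one replica on a live node."""
    failed = set(failed_nodes)
    return [
        block
        for block, holders in placement.items()
        if any(node not in failed for node in holders)
    ]
```

With four nodes and three replicas per block, every block stays readable when any two nodes fail, because each block lives on three different nodes. Data only becomes unavailable once all holders of a block are down.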

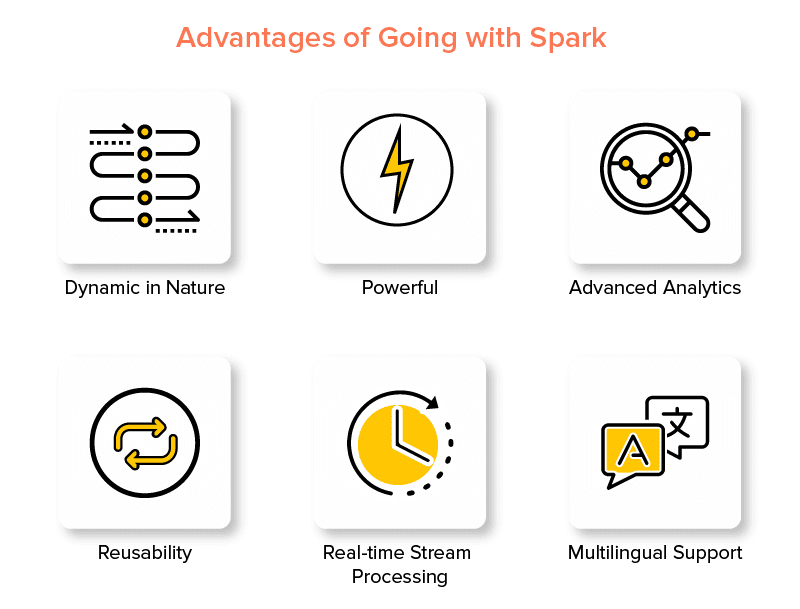

Benefits of Apache Spark

1. Dynamic in Nature

Because Apache Spark provides about 80 high-level operators, it can be used flexibly for data processing. It can be considered one of the best Big Data tools for building and managing parallel applications.

2. Powerful

It can handle numerous analytics challenges thanks to its low-latency, in-memory data processing and its built-in libraries for machine learning and graph analytics algorithms. This makes it a strong choice for big data workloads.

3. Advanced Analytics

Another distinctive aspect of Spark is that it supports not only ‘map’ and ‘reduce’ operations but also machine learning (ML), SQL queries, graph algorithms, and data streaming. This makes it well suited to advanced analytics.

4. Reusability

Unlike Hadoop, Spark lets you reuse the same code for batch processing, run ad-hoc queries on stream state, join streams against historical data, and more.

5. Real-Time Stream Processing

Another benefit of going with Apache Spark is that it allows real-time information handling and processing.

6. Multilingual Support

Last but not least, this Big Data analytics tool supports several programming languages, including Java, Python, and Scala.

Apache Spark vs Apache Hadoop

So, without further delay, let’s compare the two and see which one leads the battle of ‘Spark vs Hadoop.’

1. Architecture

When it comes to architecture, Hadoop leads, even though both frameworks operate in a distributed computing environment.

This is because Hadoop’s architecture, unlike Spark’s, has two primary components: HDFS (Hadoop Distributed File System) and YARN (Yet Another Resource Negotiator). HDFS manages the storage of massive data sets across different nodes, while YARN takes care of processing tasks through resource allocation and job scheduling. These components are further divided into sub-components in order to provide services such as fault tolerance.

2. Ease of Use

Apache Spark offers developers user-friendly APIs for Scala, Python, R, and Java, as well as Spark SQL. It also comes with an interactive mode that supports both users and developers. This makes it easy to use and gives it a gentle learning curve.

Hadoop, by contrast, offers add-ons to assist users but no interactive mode. This gives Apache Spark the win over Hadoop in this round of the ‘big data’ battle.

3. Fault Tolerance

Although both Apache Spark and Hadoop MapReduce have mechanisms for fault tolerance, the latter wins this round.

This is because if a process crashes partway through a Spark job, the lost in-memory results generally have to be recomputed, in the worst case from the start of the job. Hadoop MapReduce, by contrast, writes intermediate results to disk, so it can continue from the point of the crash.
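Spark's recovery is usually described in terms of lineage: each dataset remembers the transformations that produced it, so a lost result can be rebuilt by replaying them from the source. The toy class below is a minimal sketch of that idea in plain Python. It is not Spark's API; the `Dataset` class and its methods are hypothetical names chosen for illustration.

```python
class Dataset:
    """A toy dataset that remembers how it was derived (its lineage)."""

    def __init__(self, source=None, parent=None, fn=None):
        self.source = source  # raw input, set only on the root dataset
        self.parent = parent  # dataset this one was derived from
        self.fn = fn          # per-element transformation applied to the parent
        self.cache = None     # in-memory result, which a crash may wipe

    def map(self, fn):
        """Record a transformation without running it yet."""
        return Dataset(parent=self, fn=fn)

    def collect(self):
        """Return the result, recomputing from the parent if the cache is gone."""
        if self.cache is None:
            if self.parent is None:
                self.cache = list(self.source)
            else:
                self.cache = [self.fn(x) for x in self.parent.collect()]
        return self.cache

    def lose_cache(self):
        """Simulate a crash that wipes the in-memory result."""
        self.cache = None
```

If a derived dataset loses its cached result, calling `collect()` again walks back up the chain and replays each transformation. The cost of recovery therefore depends on how far back the nearest surviving result is, which is why a crash can mean redoing much of the work.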

4. Performance

When it comes to performance, Spark wins over MapReduce.

Apache Spark is claimed to run up to 10 times faster on disk and up to 100 times faster in memory. It has also been reported to process 100 TB of data 3 times faster than Hadoop MapReduce.

5. Processing Data

Data processing is another aspect to consider in the Apache Spark vs Hadoop comparison.

While Apache Hadoop only supports batch processing, Spark supports interactive, iterative, stream, graph, and batch processing. This suggests that Spark is the better choice when richer data processing capabilities are needed.

6. Compatibility

Spark and Hadoop MapReduce are broadly similar in terms of compatibility.

Although both big data systems often serve as standalone applications, they can also run together. Spark can run on top of Hadoop YARN, while Hadoop integrates easily with Sqoop and Flume. As a result, each supports the other's data sources and file formats.

7. Security

Spark includes security features such as event logging and the use of Java servlet filters for protecting web UIs. It also supports authentication through shared secrets and, when integrated with YARN and HDFS, can take advantage of HDFS file permissions, inter-node encryption, and Kerberos.

Hadoop, on the other hand, supports Kerberos authentication, third-party authentication, traditional file permissions, access control lists, and more, which ultimately delivers stronger security. So, Hadoop leads in the Spark vs. Hadoop comparison in terms of security.

8. Cost-Efficiency

When Hadoop and Apache Spark are compared, the former needs more disk space, while the latter requires more RAM. Also, since Spark is newer than Apache Hadoop, developers experienced with Spark are harder to find.

This makes working with Spark a costlier affair. In other words, when one focuses on Hadoop vs. Spark cost, Hadoop provides the more cost-effective solution.

9. Market Scope

Although both Apache Spark and Hadoop are backed by large corporations and have been used for various purposes, Hadoop leads in terms of market reach.

Conclusion

I hope this comparison helps you reach a conclusion about Hadoop and Spark.
