Snowflake Data Warehouse 101: A Comprehensive Guide

Snowflake is a Cloud-Based Data Warehouse that was founded in 2012 by three data warehousing experts. Six years later, the company received a $450 million venture capital investment, valuing it at $3.5 billion. But what exactly is Snowflake Data Warehouse, and why is this Cloud-Based Data Warehouse causing such a stir in the analytics world?

This article will give you a comprehensive guide to Snowflake Data Warehouse. You will get to know about the architecture and performance of Snowflake Data Warehouse. You will also explore the Features, Pricing, Advantages, Limitations, and many more in further sections. Let’s get started.

What is Snowflake Data Warehouse?

Snowflake Data Warehouse is a fully managed, cloud data warehouse available to customers in the form of Software-as-a-Service (SaaS) or Database-as-a-Service (DaaS). The phrase ‘fully managed’ means users shouldn’t be concerned about any of the back-end work like server installation, maintenance, etc. A Snowflake Data Warehouse instance can easily be deployed on any of the three major cloud providers –

- Amazon Web Services (AWS)

- Google Cloud Storage (GCS)

- Microsoft Azure

The customer can select which cloud provider they want for their Snowflake instance. This comes in handy for firms working with multiple cloud providers. Snowflake querying follows the standard ANSI SQL protocol and it supports fully structured as well as semi-structured data like JSON, Parquet, XML, etc.

Become a Snowflake Certified professional by learning this HKR Snowflake Training !

Architecture of Snowflake Data Warehouse

Here’s a diagram depicting the fundamental Snowflake architecture –

At the storage level, there exists cloud storage that includes both shared-disk (for storing persistent data) as well as shared-nothing (for massively parallel processing or MPP of queries with portions of data stored locally) entities. Ingested cloud data is optimized before storing in a columnar format. The data ingestion, compression, and storage are fully managed by Snowflake; as a matter of fact, this stored data is not directly accessible to users and can only be accessed via SQL queries.

Next up is the query processing level, this is where the SQL queries are executed. All the SQL queries are part of a particular cluster that consists of several compute nodes (this is customizable) and are executed in a dedicated, MPP environment. These dedicated MPPs are also known as virtual data warehouses. It is not uncommon for a firm to have separate virtual data warehouses for individual business units like sales, marketing, finance, etc. This setup is more costly but it ensures data integrity and maximum performance.

Finally, we have cloud services. As mentioned in the boxes, these are a bunch of services that help tie together the different units of Snowflake ranging from access control/data security to infrastructure and storage management.

Performance of Snowflake Data Warehouse

The Snowflake Features has been designed for simplicity and maximum efficiency via parallel workload execution through the MPP architecture. The idea of increasing query performance is switched from the traditional manual performance tuning options like indexing, sorting, etc. to following certain generally applicable best practices. These include the following –

- Workload Separation

- Persisted or Cached Results

1. Workload Separation

Because it is super easy to spin up multiple virtual data warehouses with the desired number of compute nodes, it is a common practice to divide the workloads into separate clusters based on either Business Units (sales, marketing, etc.) or type of operation (data analytics, ETL/BI loads, etc.) It is also interesting to note that virtual data warehouses can be set to auto-suspend (default is 10 minutes) when they go inactive or in other words, no queries are being executed. This feature ensures that customers don’t accrue a lot of costs while having many virtual data warehouses operate in parallel.

2. Persisted or Cached Results

Query results are stored or cached for a certain timeframe (default is 24 hours). This is utilized when a query is essentially re-run to fetch the same result. Caching is done at two levels – local cache and result cache. Local cache provides the stored results for users within the same virtual data warehouse whereas result cache holds results that could be retrieved by users regardless of the virtual data warehouse they belong to.

ETL and Data Transfer in Snowflake Data Warehouse

ETL refers to the process of extracting data from a certain source, transform the source data to a certain format (typically the format that matches up to the target table) and load this data into the desired target table. The source and target are often two different entities or database systems. Some examples include a flat-file load into an Oracle table, a CRM data export into an Amazon Redshift table, data migration from a Postgres database onto a Snowflake Data Warehouse, etc.

Snowflake has been designed to connect to a multitude of data integrators using either a JDBC or an ODBC connection.

In terms of loading data, Snowflake offers two methods –

- Bulk Loading

This is basically batch loading of data files using the COPY command. COPY command lets users copy data files from the cloud storage into Snowflake tables. This step involves writing code that typically gets scripted to run at scheduled intervals. - Continuous Loading

In this case, smaller amounts of data are extracted from the staging environment (as soon as they are available) and loaded in quick increments into a target Snowflake table. The feature named Snowpipe makes this possible.

Snowflake offers a bunch of transformation options for the incoming data before the load. This is achieved through the COPY command. Some of these include –

Reordering of columns

- Column omissions

- Casting columns in the select statement

When it comes to dealing with these intricacies of ETL, it is best to implement a fully managed Data Integration Software solution like Hevo.

Simplify ETL using Hevo’s No-code Data Pipeline

Hevo Data helps you directly transfer data from 100+ data sources (including 30+ free sources) to Snowflake, Business Intelligence tools, Data Warehouses, or a destination of your choice in a completely hassle-free & automated manner. Hevo is fully managed and completely automates the process of not only loading data from your desired source but also enriching the data and transforming it into an analysis-ready form without having to write a single line of code. Its fault-tolerant architecture ensures that the data is handled in a secure, consistent manner with zero data loss.

Hevo takes care of all your data preprocessing needs required to set up the integration and lets you focus on key business activities and draw a much powerful insight on how to generate more leads, retain customers, and take your business to new heights of profitability. It provides a consistent & reliable solution to manage data in real-time and always have analysis-ready data in your desired destination.

Check out what makes Hevo amazing:

- Secure: Hevo has a fault-tolerant architecture that ensures that the data is handled in a secure, consistent manner with zero data loss.

- Schema Management: Hevo takes away the tedious task of schema management & automatically detects the schema of incoming data and maps it to the destination schema.

- Minimal Learning: Hevo, with its simple and interactive UI, is extremely simple for new customers to work on and perform operations.

- Hevo Is Built To Scale: As the number of sources and the volume of your data grows, Hevo scales horizontally, handling millions of records per minute with very little latency.

- Incremental Data Load: Hevo allows the transfer of data that has been modified in real-time. This ensures efficient utilization of bandwidth on both ends.

- Live Support: The Hevo team is available round the clock to extend exceptional support to its customers through chat, email, and support calls.

- Live Monitoring: Hevo allows you to monitor the data flow and check where your data is at a particular point in time.

Scaling on Snowflake Data Warehouse

Previously, the article briefly touched on virtual data warehouses, clusters, nodes, etc. Now, let’s dive deeper into these areas to better understand how can one tweak these to enable scaling most efficiently.

Snowflake provides for two kinds of scaling –

- Scaling up

- Scaling out

1. Scaling up

Scaling up means resizing a virtual data warehouse in terms of its nodes. A Snowflake Data Warehouse user can easily modify the number of nodes assigned to a virtual data warehouse. This can be done even while the data warehouse is in operation, although, only the queries that are newly submitted or the ones already queued will be affected by the changes. Apart from the ‘auto suspend’ feature described before, there is a provision to set the minimum and a maximum number of nodes per warehouse.

After setting the maximum and the minimum number of nodes, let Snowflake decide when to scale up or down the number of nodes based on the warehouse activity. This is an efficient way to set up your cluster. Scaling is particularly suitable in the following cases –

- To improve query performance in case of larger and more complex queries.

- When the queries are submitted using the same local cache.

- The option to scale out is not there.

Scaling out is generally preferred, especially with the more recent addition and availability of multi-cluster warehouses, which will be discussed next.

2. Scaling out

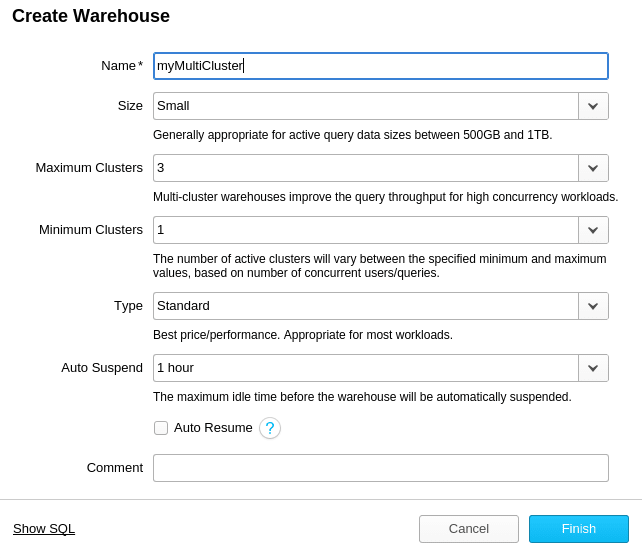

Scaling out before referred to adding more virtual data warehouses. However, with the advent of the recent multi-cluster warehouse feature, the old way has become more or less obsolete. So let’s get into the multi-cluster warehouse set up – as the name suggests, in this type of arrangement, a data warehouse can have multiple clusters each having a different set of nodes. Even though Snowflake provides for a ‘maximized’ option, which is an instruction for the data warehouse to have all of its clusters running regardless, almost always, you would want to set this to the ‘Auto-Scale’ mode. Here’s an example of how Auto scaling looks like –

As can be seen, you can set a bunch of parameters in a way that works best for you.

Features like Auto-scale and Auto-suspend provides flexibility for query execution as well as cost management. Let’s see how that works in the next section.

Pricing of Snowflake Data Warehouse

Snowflake has a fairly simple pricing model – charges apply to storage and compute aka virtual data warehouses. The storage is charged for every Terabyte (TB) of usage while compute is charged at a per second per computing unit (or credit) basis. Before getting into an example, it is worthwhile to note that Snowflake offers two broader pricing models –

- On-demand – Pay per your usage of storage and compute

- Pre-purchased – A set capacity of storage and compute could be pre-purchased at a discount as opposed to accruing the same usage at a higher cost via on-demand.

Now onto the usage pricing examples, the two popular on-demand pricing models available are as follows –

1. Snowflake Standard Edition

Storage costs you around $23 per TB and computes costs would be approximately 4 cents per minute per credit, billed for a minimum time of one minute.

2. Snowflake Enterprise Sensitive Data Edition

Being a premium version with advanced encryption and security features as well as HIPAA compliance, storage costs roughly the same while compute gets bumped to around 6.6 cents per minute per credit.

The above charges for compute apply only to ‘active’ data warehouses and any inactive session time is ignored for billing purposes. This is why it’s important and profitable to set features like auto-suspend and auto-scale in a way as to minimize the charges accrued for idle warehouse periods.

Top 30 frequently asked Snowflake Interview Questions !

Data Security & Maintenance on Snowflake Data Warehouse

Data security is dealt with very seriously at all levels of the Snowflake ecosystem.

Regardless of the version, all data is encrypted using AES 256, and the higher-end enterprise versions have additional security features like period rekeying, etc.

As Snowflake is deployed on a cloud server like AWS or MS Azure, the staging data files (ready for loading/unloading) in these clouds get the same level of security as the staging files for Amazon Redshift or Azure SQL Data Warehouse. While in transit, the data is heavily protected using industrial-strength secure protocols.

As for maintenance, Snowflake is a fully managed cloud data warehouse, end users have practically nothing to do to ensure a smooth day-to-day operation of the data warehouse. This helps customers tremendously to focus more on the front-end data operations like data analysis and insights generation, and not so much on the back-end stuff like server performance and maintenance activities.

Key Features of Snowflake Data Warehouse

Ever since the Snowflake Data Warehouse got into the growing cloud Data Warehouse market, it has established itself as a solid choice. That being said, here are some things to consider that might make it particularly suitable for your purposes –

- It offers five editions going from ‘standard’ to ‘enterprise’. This is a good thing as customers have options to choose from based on their specific needs.

- The ability to separate storage and compute is something to consider and how that relates to the kind of data warehousing operations you’d be looking for.

- Snowflake is designed in a way to ensure the least user input and interaction required for any performance or maintenance-related activity. This is not a standard among cloud DWHs. For instance, Redshift needs user-driven data vacuuming.

- It has some cool querying features like undrop, fast clone, etc. These might be worth checking out as they may account for a good chunk of your day-to-day data operations.

Advantages of Snowflake Data Warehouse

Here are the advantages of using Snowflake Data Warehouse as your data warehousing solution –

- Fully managed, cloud-deployed DWH requiring minimal effort to get set up and running.

- Easy to scale with a lot of flexible options (as discussed above).

- Storage and Compute can be separated. This is not common in the cloud data warehousing solutions space.

- Follows ANSI SQL protocol; supports fully structured as well as semi-structured data types like JSON, Parquet, XML, ORC, etc.

- Quite popular and successful with a large number of clients using the service, Snowflake competes in the same league as Amazon Redshift, Google BigQuery, etc.

Limitations of Snowflake Data Warehouse

Here are the limitations of using Snowflake Data Warehouse:

- The dependence that Snowflake has on AWS, Azure, or GCS can be a problem at times when there is an independent (independent of Snowflake operations’ ‘health’) outage in one of these cloud servers.

- Not know particularly for its customer support.

- Doesn’t have any provision to support unstructured data as of yet.

- Currently doesn’t have many options to work with geospatial data.

Is Snowflake a Data Lake?

Snowflake Data Warehouse delivers essential infrastructure for handling a Data Lake, and Data Warehouse needs. It can store semi-structured and structured data in one place due to its multi-clusters architecture that allows users to independently query data using SQL. Moreover, Snowflake as a Data Lake offers a flexible Query Engine that allows users to seamlessly integrate with other Data Lakes such as Amazon S3, Azure Storage, and Google Cloud Storage and perform all queries from the Snowflake Query Engine.

Why was the Company Called Snowflake?

One of the reasons why the company called Snowflake is that Snowflake has many edges in multiple directions. So as the Snowflake Data Warehouse offers virtual Data Warehousing allowing users to create and organize Data Warehouses just like dimensions tables surround fact tables. The architecture of Snowflake Data Warehouse resembles the Snowflake. Another reason for its name is that the early investors and founders love the winter season, and the name is given as a tribute to it.

Alternatives for Snowflake Data Warehouse

The shift towards cloud data warehousing solutions picked up real pace in the late 2000s, mostly thanks to Google and Amazon. Since then, so many traditional database vendors like Microsoft, Oracle, etc. as well as newer players like Vertica, Panoply, etc. have entered this space. Having said that, let’s take a look at some of the popular alternatives to Snowflake.

- Amazon Redshift

- Google BigQuery

- Azure SQL Data Warehouse

1. Amazon Redshift

The cloud data warehousing solution of one of the largest cloud providers (Amazon Web Services or AWS) in this domain that can work with Petabyte scale data. Supports fully structured as well as some semi-structured data like JSON, stored in columnar format. However, compute and storage are not separate like Snowflake. Generally, a costlier alternative to Snowflake but more robust and faster with optimizable tuning techniques like materialized views, sorting/distribution keys, etc.

2. Google BigQuery

Also, a columnar, structured data warehouse that is part of the Google Cloud Services suite. It has other features comparable to Amazon Redshift like MPP architecture. It can be easily integrated with other data vendors, etc. BigQuery is similar to Snowflake in the sense that storage and compute are treated separately, however, instead of a discounted, pre-purchase pricing model (as in Snowflake), BigQuery services are charged monthly/yearly at a flat rate.

3. Azure SQL Data Warehouse

Azure is gaining in popularity by the day and is especially known for performing analytics tasks. It is part of the Microsoft suite of products so there is a natural advantage for users and firms dealing with MS products and technologies like SQL Server, SSRS, SSIS, T-SQL, etc. Also, a columnar database, with storage and compute separated. Azure SQL engine is also known for its high level of concurrency.

Conclusion

As can be gathered from the article so far, the Snowflake Data Warehouse is a secure, scalable, and popular cloud data warehousing solution. It has achieved this status by constantly re-engineering and catering to a wide variety of industrial use cases that helped win over so many clients.

No comments: