MapReduce Algorithm Tutorial in Hadoop Admin

Algorithm

Tasks in MapReduce Algorithm

In MapReduce, a large job is divided into smaller tasks, which are then allotted to many systems. The MapReduce algorithm contains two important tasks:

- Map

- Reduce

The Map task is always performed first and is then followed by the Reduce task. Map converts one data set into another data set, in which individual elements are broken into tuples (key-value pairs).


The Reduce task takes the output of the Map task as its input and combines those data tuples into a smaller set of tuples.

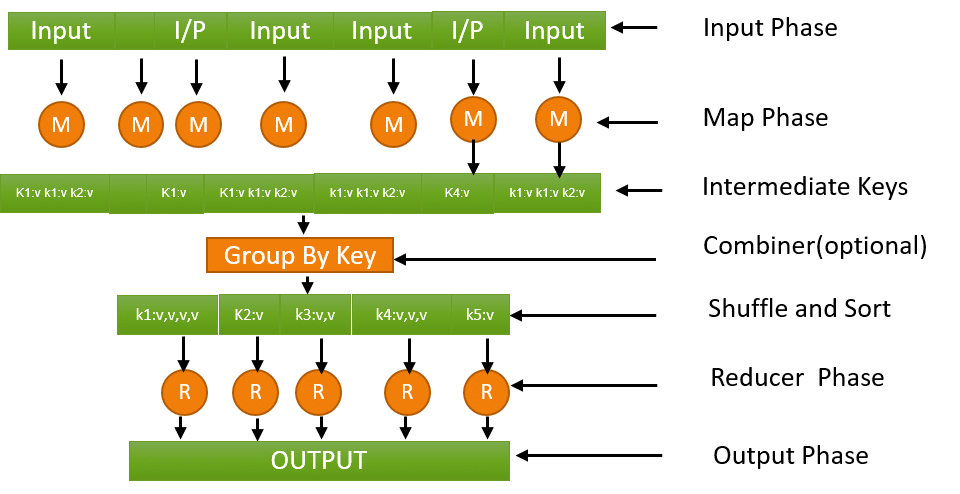

Input Phase: A record reader translates each record in an input file and sends the parsed data to the mapper in the form of key-value pairs.
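A minimal sketch of a record reader, written as plain Python rather than Hadoop's API. It assumes line-oriented text input and loosely mimics Hadoop's default text input convention, where the key is the byte offset at which a line starts and the value is the line itself. The function name `read_records` is hypothetical.

```python
from typing import Iterator, Tuple


def read_records(data: bytes) -> Iterator[Tuple[int, str]]:
    """Hypothetical record reader: yields (byte_offset, line) key-value pairs."""
    offset = 0
    for raw_line in data.splitlines(keepends=True):
        # Key: byte offset where this line starts; value: the line without its newline.
        yield offset, raw_line.rstrip(b"\r\n").decode("utf-8")
        offset += len(raw_line)


records = list(read_records(b"the cat\nthe dog\n"))
print(records)  # [(0, 'the cat'), (8, 'the dog')]
```

Using byte offsets as keys gives every record a unique, cheap-to-compute key without the reader having to understand the contents of the line.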

Map Phase: Map is a user-defined function that takes a series of key-value pairs and processes each one of them to generate zero or more key-value pairs.
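As an illustration, here is a word-count map function in plain Python (not Hadoop's `Mapper` class). Word count is assumed here only as a familiar example, and the name `word_count_map` is hypothetical. Each input pair produces one `(word, 1)` pair per word, so an empty line produces zero pairs.

```python
from typing import Iterator, Tuple


def word_count_map(key: int, value: str) -> Iterator[Tuple[str, int]]:
    """Hypothetical mapper: one input pair in, zero or more intermediate pairs out."""
    # The key (a byte offset from the record reader) is not needed for word count.
    for word in value.lower().split():
        yield word, 1


print(list(word_count_map(0, "The cat saw the dog")))
# [('the', 1), ('cat', 1), ('saw', 1), ('the', 1), ('dog', 1)]
print(list(word_count_map(8, "")))  # []
```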

Intermediate Keys: The key-value pairs generated by the mapper are known as intermediate keys.

Combiner: A combiner takes the intermediate keys from a mapper as input and applies user-defined code to combine their values within the small scope of that one mapper. The combiner is optional.
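A combiner for the word-count example might look like the plain-Python sketch below. The function name `combine` is hypothetical, and it assumes the output of a single mapper fits in memory. Summing works as a combiner because addition is associative and commutative, so pre-summing counts locally does not change the final totals, while it reduces the number of pairs sent to the reducers.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def combine(mapper_output: Iterable[Tuple[str, int]]) -> List[Tuple[str, int]]:
    """Hypothetical combiner: pre-sums counts from ONE mapper's output."""
    totals = Counter()
    for word, count in mapper_output:
        totals[word] += count
    return sorted(totals.items())


one_mapper = [("the", 1), ("cat", 1), ("the", 1), ("dog", 1)]
print(combine(one_mapper))  # [('cat', 1), ('dog', 1), ('the', 2)]
```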

Shuffle and Sort: Shuffle and sort is the first step of the reduce task. When the reducer runs, it downloads the grouped key-value pairs onto the local machine where it is running. The individual key-value pairs are sorted by key into a larger data list, which groups equal keys together so that their values can be iterated over easily in the reduce task.
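The grouping and sorting step can be simulated in plain Python as follows. The sketch assumes a single reducer (a real cluster would first partition keys across several reducers), and the name `shuffle_and_sort` is hypothetical. Each mapper's output is collected, values are grouped by key, and the groups are sorted by key.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def shuffle_and_sort(
    outputs_from_mappers: Iterable[Iterable[Tuple[str, int]]],
) -> List[Tuple[str, List[int]]]:
    """Hypothetical shuffle/sort for one reducer: groups all values by key."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for mapper_output in outputs_from_mappers:  # "download" each mapper's pairs
        for key, value in mapper_output:
            grouped[key].append(value)
    # Sort by key so the reducer sees each key once, with all of its values.
    return sorted(grouped.items())


mapper_a = [("cat", 1), ("the", 2)]
mapper_b = [("dog", 1), ("the", 1)]
print(shuffle_and_sort([mapper_a, mapper_b]))
# [('cat', [1]), ('dog', [1]), ('the', [2, 1])]
```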

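Reducer Phase: The reducer takes the grouped key-value data as input and runs a reducer function on each group. The data can be combined, filtered, and aggregated in a number of ways, which allows a wide range of processing. When it finishes, this phase gives zero or more key-value pairs to the final step.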
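The two plain-Python reducers below (hypothetical names, not Hadoop's `Reducer` class) illustrate the "zero or more" output. `word_count_reduce` aggregates each group into exactly one pair, while `filter_reduce` drops keys whose total falls below an assumed `min_count` threshold and so may emit nothing for a key.

```python
from typing import Iterable, Iterator, Tuple


def word_count_reduce(key: str, values: Iterable[int]) -> Iterator[Tuple[str, int]]:
    """Hypothetical aggregating reducer: one output pair per key."""
    yield key, sum(values)


def filter_reduce(
    key: str, values: Iterable[int], min_count: int = 2
) -> Iterator[Tuple[str, int]]:
    """Hypothetical filtering reducer: zero pairs for keys below min_count."""
    total = sum(values)
    if total >= min_count:
        yield key, total


grouped = [("cat", [1]), ("dog", [1]), ("the", [2, 1])]
totals = [pair for k, vs in grouped for pair in word_count_reduce(k, vs)]
frequent = [pair for k, vs in grouped for pair in filter_reduce(k, vs)]
print(totals)    # [('cat', 1), ('dog', 1), ('the', 3)]
print(frequent)  # [('the', 3)]
```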

Output Phase: In the output phase, an output formatter translates the final key-value pairs from the reducer function and writes them onto a file using a record writer.
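A record writer can be sketched in plain Python as below. The function name `write_records` is hypothetical, and an in-memory `io.StringIO` stands in for the output file. The tab separator is an assumption chosen to resemble what, to our understanding, Hadoop's plain-text output uses by default.

```python
import io
from typing import Iterable, TextIO, Tuple


def write_records(
    pairs: Iterable[Tuple[str, int]], out: TextIO, sep: str = "\t"
) -> None:
    """Hypothetical record writer: one 'key<sep>value' line per final pair."""
    for key, value in pairs:
        out.write(f"{key}{sep}{value}\n")


buffer = io.StringIO()  # stands in for an output file
write_records([("cat", 1), ("dog", 1), ("the", 3)], buffer)
print(repr(buffer.getvalue()))  # 'cat\t1\ndog\t1\nthe\t3\n'
```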
